Naughty GETTR posts? Say bye-bye to your TWITTER account

The World Economic Forum is becoming a little concerned. Unapproved opinions are becoming more popular, and online censors cannot keep up with millions of people becoming more aware and more vocal. The censorship engines employed by Internet platforms have turned out to be quite stupid and incapable. People are even daring to complain about the World Economic Forum, which is obviously completely unacceptable.

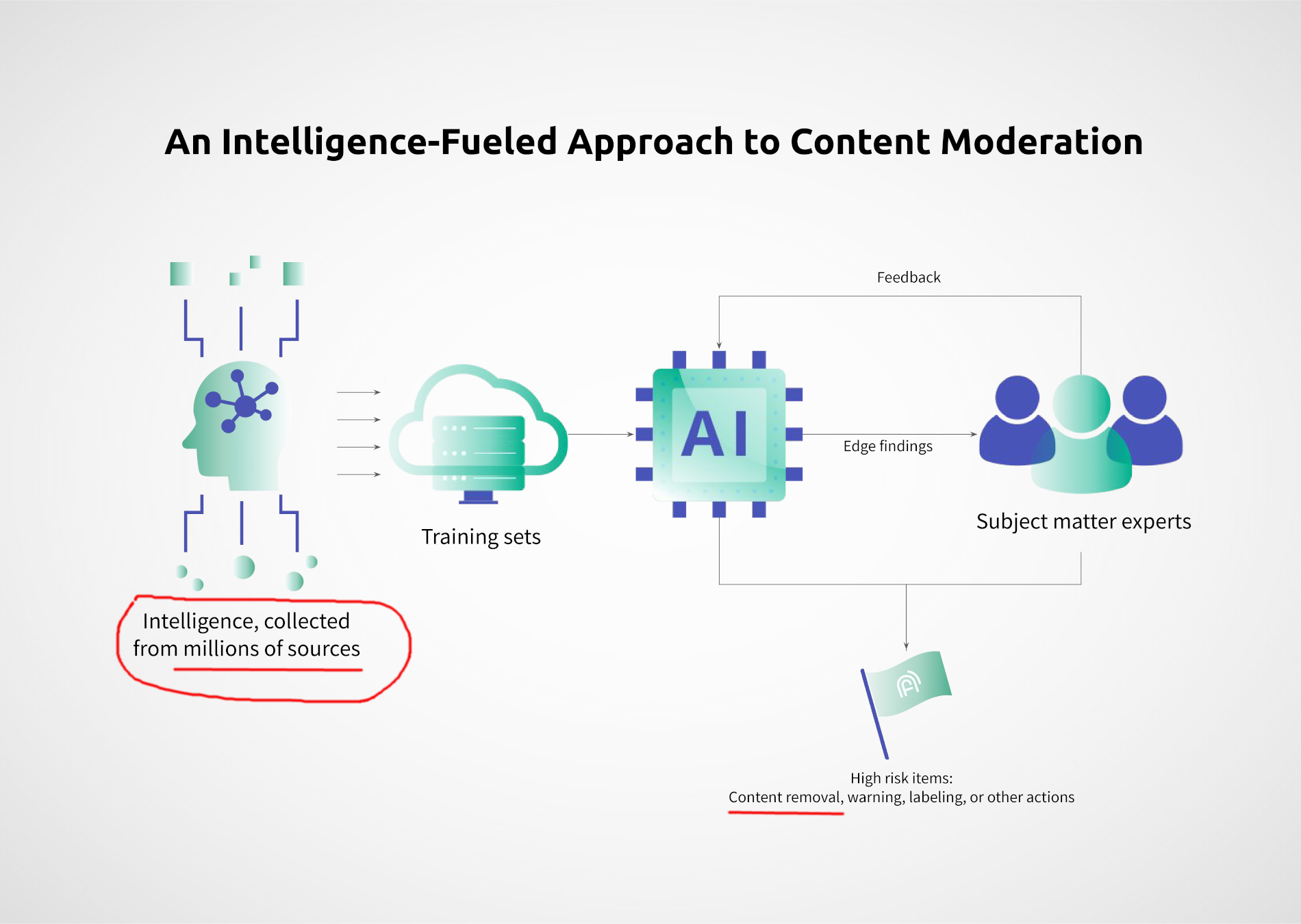

So, WEF author Inbal Goldberger came up with a solution: she proposes to collect off-platform intelligence from “millions of sources” to spy on people and new ideas, and then merge this information together for “content removal decisions” sent down to “Internet platforms”.

To overcome the barriers of traditional detection methodologies, we propose a new framework: rather than relying on AI to detect at scale and humans to review edge cases, an intelligence-based approach is crucial.

By bringing human-curated, multi-language, off-platform intelligence into learning sets, AI will then be able to detect nuanced, novel abuses at scale, before they reach mainstream platforms. Supplementing this smarter automated detection with human expertise to review edge cases and identify false positives and negatives and then feeding those findings back into training sets will allow us to create AI with human intelligence baked in. This more intelligent AI gets more sophisticated with each moderation decision, eventually allowing near-perfect detection, at scale.

What is this about? What’s new?

The way censorship is done these days is that each Internet platform, such as Twitter, has its own moderation team and decision-making engine. When deciding whether to delete tweets or suspend their authors, Twitter looks only at what a given user has posted on Twitter. Twitter moderators do NOT look at Gettr or other external websites.

So, for example, user @JohnSmith12345 may have a Twitter account and narrowly abide by Twitter rules, but at the same time have a Gettr account where he would publish anti-vaccine messages. Twitter would not be able to suspend @JohnSmith12345’s account. That is no longer acceptable to the WEF because they want to silence people and ideas, not individual messages or accounts.

This explains why the WEF needs to move beyond the major Internet platforms, in order to collect intelligence about people and ideas everywhere else. Such an approach would allow them to know better what person or idea to censor — on all major platforms at once.

They want to collect intelligence from “millions of sources”, and train their “AI systems” to detect thoughts that they do not like, to make content removal decisions handed down to the likes of Twitter, Facebook, and so on. This is a major change from the status quo of each platform deciding what to do based on messages posted to that specific platform only.

For example, in addition to looking at my Twitter profile, WEF’s proposed AI would also look at my Gettr profile, and then it would make an “intelligent decision” to remove me from the Internet at once. This is somewhat of a simplification, because they also want to look for ideas and not only individuals. Nevertheless, the search for wrongthink becomes globalized.

This sounds like an insane conspiracy theory from hell: WEF collecting information on everyone everywhere, and then telling all platforms what posts to remove, based on a global decision-making AI engine that sees everything and can identify individual people and ideas beyond any given platform.

If someone had ever told me that this would be contemplated, I would probably have thought that person was insane. It sounds like a sick technological fantasy. Unfortunately, this crazy stuff is real: it is in a proposal officially posted in the “WEF Agenda” section of their website. And WEF is not messing around.

You will have no voice and you will be happy!

Klaus Schwab’s World Economic Forum proposes automating the censorship of ‘disinformation’

An article on the World Economic Forum’s website makes the case for using artificial intelligence to nip Internet abuse in the bud before it reaches the major platforms.

In doing so, ‘disinformation’ is mentioned in the same breath as child abuse, extremism, online hate and fraud. The artificial intelligence becomes increasingly refined, so that over time it can detect Internet abuse with near-perfect accuracy, the article states.

Automation

The author notes that the artificial intelligence cannot immediately detect new ‘abuse tactics’. Hence the need for ‘safety teams’ that watch alongside it and moderate posts.

In summary: Klaus Schwab’s WEF proposes automating the censorship of ‘hate speech’ and ‘disinformation’ by means of an artificial intelligence that is fed by ‘experts’.

High risk

The process works as follows: information from millions of sources passes through so-called training sets and is processed by the artificial intelligence. The ‘experts’ assess the artificial intelligence’s findings and provide feedback. Items deemed ‘high risk’ are then removed or given, for example, a warning label.