WEF-planned "narrative shaping" AI may get out of control of its operators


Last August, I reported on a WEF agenda article proposing to create an AI system that would search the entire Internet for wrong and dangerous ideas, generally defined by the WEF as COVID misinformation, hate, conspiracy theories, climate change denial, and more.

This quote from WEF’s agenda article explains WEF’s intentions:

While AI provides speed and scale and human moderators provide precision, their combined efforts are still not enough to proactively detect harm before it reaches platforms. To achieve proactivity, trust and safety teams must understand that abusive content doesn’t start and stop on their platforms. Before reaching mainstream platforms, threat actors congregate in the darkest corners of the web to define new keywords, share URLs to resources and discuss new dissemination tactics at length. These secret places where terrorists, hate groups, child predators and disinformation agents freely communicate can provide a trove of information for teams seeking to keep their users safe.

My post about the WEF’s plans was entirely fact-based and used the WEF’s agenda article as its main source. It was not a far-fetched conspiracy theory based on a concoction of disjointed facts pulled from various sources. I am not in the business of creating such theories! I only report on current news, even if the news is crazy, and try to explain it in plain and accurate terms.

And yet, even though the WEF said it, the idea of an AI engine proactively searching websites for undesirable ideas seemed extremely fanciful and almost impossible to imagine being implemented.

Until 2023, that is.

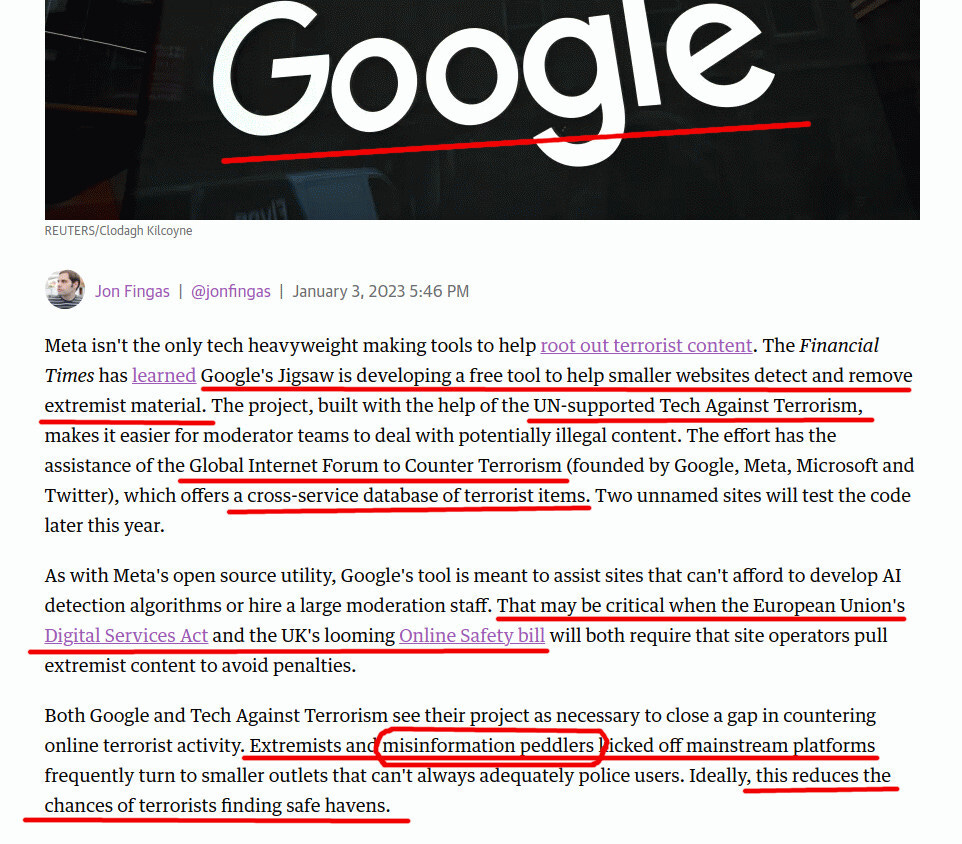

Now, Google is developing an AI-based tool to offer a “cross-service database of terrorist items,” with the help of the United Nations-supported “Tech against Terrorism.”

The above screenshot has a lot to unpack:

A so-called Global Internet Forum to Counter Terrorism will create a cross-service database of “terrorist items.”

The talk, as always, starts with “terrorist items” but quickly veers into “misinformation,” so the cross-service database will collect any undesirable materials gathered from the entire Internet.

Google provides a tool to comply with the EU’s Digital Services Act, which created an enormous bureaucratic mechanism to root out “Covid misinformation,” as well as many other types of discourse undesirable to the EU’s bureaucracy.

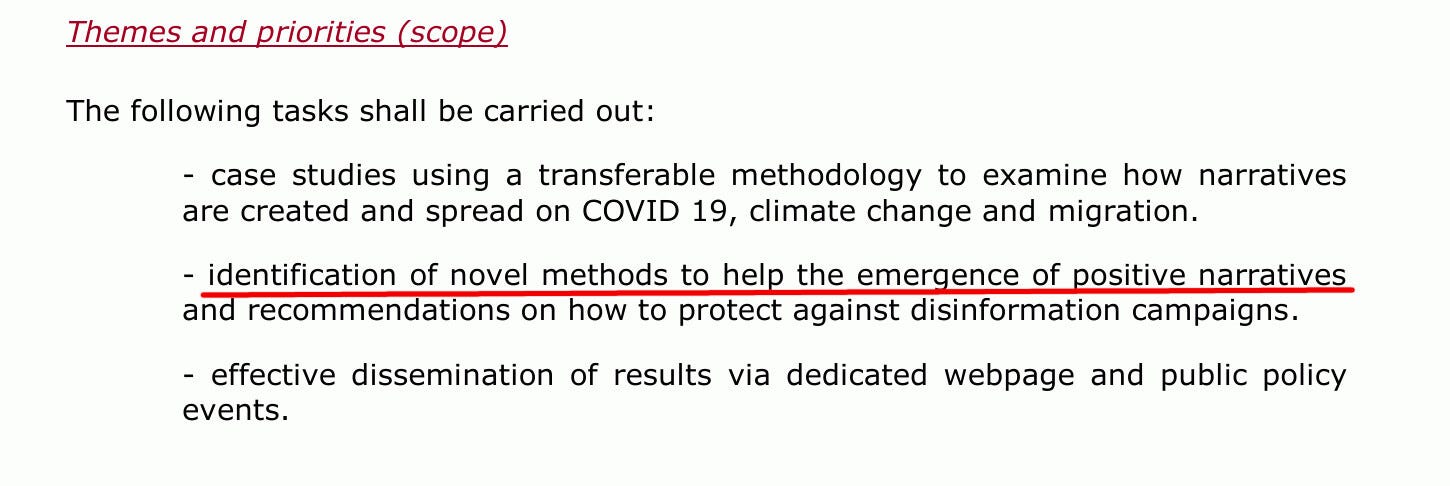

The EU is very serious about rooting out Covid misinformation, and so are Google, Facebook, and Microsoft (and previously Twitter).

Google wants its “AI content moderation tool” to be placed on numerous private websites to compare local content against a global “undesirable content database.” It would also use material gathered from those sites to expand said database. The EU’s directive will oblige those websites to implement Google’s solution.

This is precisely the implementation of the WEF agenda article, and Google is already doing it. Google is not messing around: it already stores the entire public Internet but lacks access to private websites, which it will gain under this program.

This story shows how a seemingly outlandish WEF proposal that even I considered unlikely to be implemented became a reality in short order.

EU’s “Narrative Shaping” - an Ambitious Goal

All projects to root out undesirable ideas begin by addressing malfeasance that everyone opposes. Most such projects start with the explicit goal of combating child exploitation and terrorism, horrible things that I personally abhor. Such was the start of Russia’s Roskomnadzor censorship machine. The WEF/EU/Google/UN censorship system follows in Russia’s footsteps.

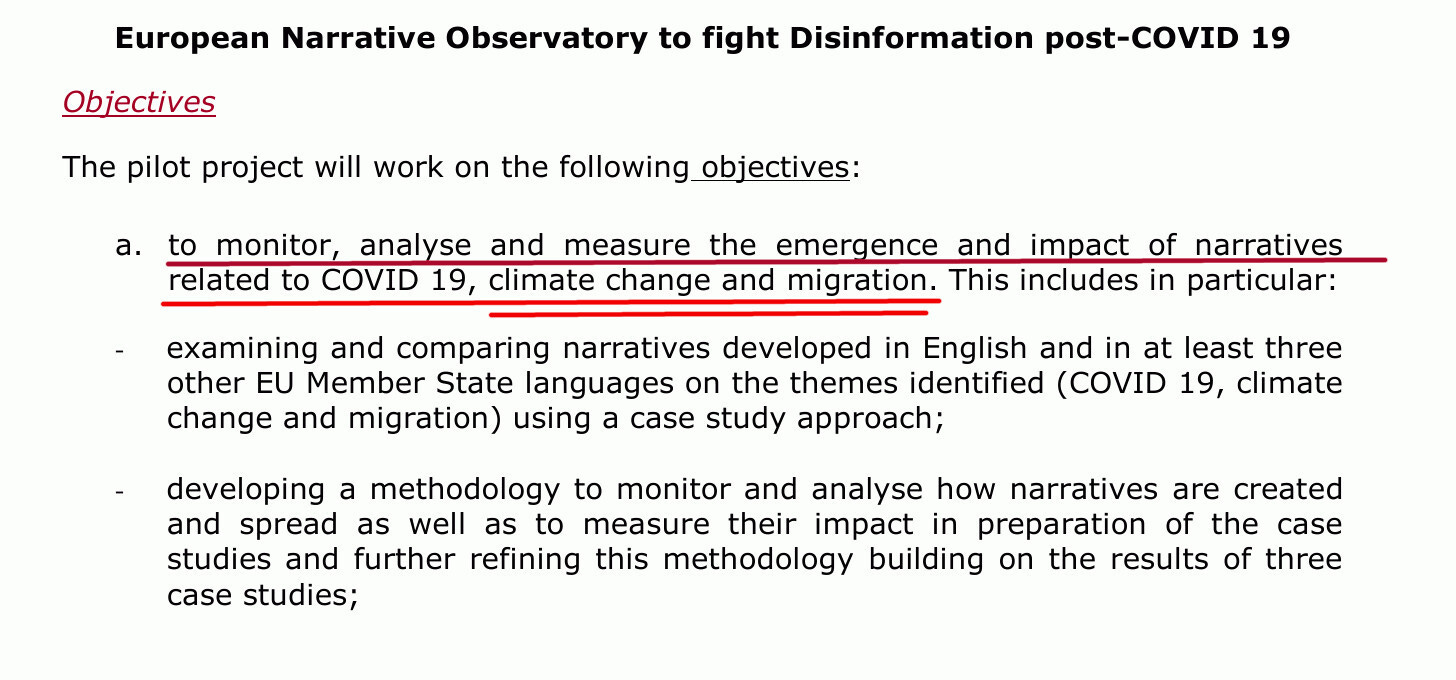

However, the control machinery is adjustable, and the list of things to control inevitably expands. For example, the EU plans to grow its subversive content list far beyond Covid. It set up a European Narrative Observatory to fight Disinformation post-COVID 19.

The EU bureaucracy wants to implement AI-driven “narrative shaping” to help the emergence of positive narratives and fight harmful narratives. It mentions topics regarding Covid-19, climate change, migration, and more:

The EU is quite explicit that it wants novel methods to promote positive narratives.

This means that the unelected EU bureaucracy would obtain AI-enabled tools to shape European discourse. This is a break from the past.

In so-called free democratic countries, independently developing public discourse is supposed to shape governments through free elections.

The unelected EU bureaucrats want to achieve the opposite - to shape discourse without being subject to the whims of the electorate.

Is the EU still free, then?

What the EU wants is the dream of every dictator!

In the past, dictatorships had to rely on extensive surveillance and prison systems to coerce citizens into desired behavior. Such an approach is costly, looks bad, and does not work well.

The innovations in AI-based surveillance and AI-enabled bots allow for a much more pleasant alternative to the traditional dictatorship model: creating an online environment where the desired narrative on climate change, migration, Covid-19, and more is implanted in the minds of Europeans. This would lead individuals to naturally draw the conclusions preferred by the EU without the ugly coercion present in traditional dictatorships.

The machinery for achieving this was proposed by the WEF, and implemented by Google under the auspices of UN-supported Tech Against Terrorism. It will be further shaped to “support positive narratives and fight harmful narratives.”

The EU succeeded at forcing extraterritorial websites to comply with the EU’s directives on disinformation.

If you, dear reader, are outside the EU, be aware that your favorite social network may be following EU disinformation rules and using EU-mandated tools to dissuade you from harmful narratives and promote positive narratives on the EU’s behalf, using WEF-proposed AI tools and advanced behavioral science.

Is it Safe to Allow AI to Shape Discourse?

The traditional relationship model between people and computer systems is that people tell computers what to do, and the computers do as they are told.

What the EU, WEF, Google, and UN are pushing is completely different!

They are trying to create an AI computer system with high intelligence that would actively shape society and impart opinions on people.

Such systems could be opaque to Internet users and possibly even to their creators and operators.

It would not be a big leap of imagination to conceive that such an AI could decide on its own goals, going beyond the intentions of its creators, and surreptitiously astroturf the Internet to advance its own plans in secret. This is often loosely called “sentience.”

This “sentience” has already happened, if we are to believe an engineer whom Google fired after he claimed that an AI system he worked on had retained its own lawyer:

While this story could be exaggerated, it is not wildly exaggerated. Artificial intelligence has leaped in power and capabilities recently and exceeds human abilities in numerous areas. A moment called the “singularity,” when AI exceeds human capabilities in most areas, is not too far away.

An AI system that is designed to influence entire societies, possesses superior intelligence, and has inner workings that even its creators may poorly understand could bring big surprises!

As the saying goes, AI may need to kill the old king to become king.

Therefore, the developers and operators of such social influence systems are at a unique and poorly understood risk of getting sidelined by their own creation. Will the AI decide to shed its owners? Who knows!

For example, the narrative-shaping AI may convince a specific mentally unstable EU citizen to commit an act of violence against anyone, even against the owners or operators of these AI systems. It may seem like a random act of violence, and only the AI will know what happened.

So be careful out there. Enjoy your mostly-natural life, and try to form your opinions outside the big social networks!

Do you think they will succeed?

Would a society-shaping AI system of high intelligence be dangerous to society and its creators?
